Artificial intelligence and machine learning permeate many aspects of your everyday life. Now they’re creeping into music production, performance, and DJing, and making the formerly impossible possible. We grilled the experts on what machine learning can and will do to change the game of computer music. Should DJs and music producers welcome it as a liberator, or fear it as a usurper of your job behind the decks? Spoiler alert: You’re going to want a better laptop.

Here Come The Machines

Every time you do a Google search or use Google Maps, Siri, or Shazam, you benefit from artificial intelligence (AI) and machine learning. The trickle of AI-assisted tools in everyday life is quickly becoming a deluge. Machine learning has begun to enable rapid advancements in text and image recognition, automated translation, and medical diagnoses, just to name a few areas.

More and more, machine learning is also affecting the world of music technology. The possibilities for simplifying tasks, enhancing creativity, and fixing mistakes in music production and DJing fascinate the imagination. It’s already being used to determine the key of a track and perform key lock, remove unwanted parts of audio recordings, automate aspects of audio mixing and mastering, create a new type of synthesizer (the Magenta NSynth neural synthesizer), and “unmix” each part of a drum loop.

Could AI someday even take your job as a DJ?

In the not-so-distant future, it’s easy to imagine a machine fixing a trainwreck mix in a recorded DJ set, making acapella and instrumental tracks out of a stereo audio file, and even becoming a virtual bandmate in your music production. Could AI someday even take your job as a DJ? Let’s slow our roll on that one for now.

What Does Machine Learning Do?

On a basic level, it’s fair to say that algorithms are the engine of machine learning, and data is its fuel. A subset of AI, machine learning employs algorithms to process huge datasets fed into computer networks, which determine patterns that allow them to process new input data.

Even experts […] have a hard time explaining how deep learning actually works

The next level of machine learning, called “deep learning,” stacks neural network layers (loose approximations of a human brain) to process more data than a human brain could. Even experts working in the field have a hard time explaining how deep learning actually works. For example, deep learning improved the speech recognition of the Amazon Echo faster and further than the team that created it ever expected, simply because people kept using it more and more.

What Music Tools Already Use Machine Learning?

Serato

Serato’s Head of Development Gary Longerstaey confirmed to DJTT that Serato uses machine-learning algorithms to assist in determining a track’s musical key, and you can imagine how feeding a machine-learning network thousands of songs with the key already determined would help it find the key of new audio files.
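To make that idea concrete, here is a minimal Python sketch of learning keys from labeled examples. It is not Serato’s algorithm, which the company did not describe. It assumes each track has already been boiled down to a 12-number pitch-class profile (how much energy lands on C, C#, D, and so on), and it uses a simple nearest-centroid classifier. The function names `train_key_model` and `predict_key`, and all of the toy data, are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def normalize(profile):
    """Scale a 12-bin pitch-class profile to unit length."""
    norm = sqrt(sum(x * x for x in profile)) or 1.0
    return [x / norm for x in profile]


def train_key_model(labeled_profiles):
    """Average the normalized profiles of all tracks that share a key label."""
    sums = defaultdict(lambda: [0.0] * 12)
    counts = defaultdict(int)
    for key, profile in labeled_profiles:
        for i, value in enumerate(normalize(profile)):
            sums[key][i] += value
        counts[key] += 1
    return {key: [v / counts[key] for v in total] for key, total in sums.items()}


def predict_key(model, profile):
    """Return the key whose averaged profile best matches (largest dot product)."""
    unit = normalize(profile)
    return max(model, key=lambda k: sum(a * b for a, b in zip(model[k], unit)))


# Toy training data (made up for illustration).
# Bin order: C C# D D# E F F# G G# A A# B
training = [
    ("C major", [9, 0, 2, 0, 7, 3, 0, 8, 0, 2, 0, 1]),
    ("C major", [8, 1, 3, 0, 6, 2, 0, 9, 0, 3, 0, 2]),
    ("A minor", [7, 0, 2, 0, 8, 1, 0, 2, 0, 9, 0, 2]),
    ("A minor", [6, 0, 3, 0, 9, 2, 0, 1, 1, 8, 0, 3]),
]
model = train_key_model(training)

# A "new" track the model has never seen, heavy on C, E, and G.
new_track = [8, 0, 2, 1, 7, 2, 0, 7, 0, 3, 0, 1]
print(predict_key(model, new_track))  # prints "C major"
```

A real system would train on thousands of tracks and far richer features, but the principle is the same: the more correctly labeled examples go in, the better the guess for an unlabeled file.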

MusicLinx

Existing songs make up the data behind other machine learning music applications, as well. The creator community app MusicLinx uses successful and trendy songs plus machine learning in its Smart Songwriting feature to show writers how their songs match up to what’s in demand. “We believe understanding how your music works in the current musical climate can definitely be pivotal in creating a successful record,” said MusicLinx founder Larry Mickie.

LANDR

The online music mastering service LANDR would never exist without AI and machine learning, as well as the thousands of songs that informed its system during its eight years of research and development.

“We gathered tens of thousands of professional mixes from a super-wide variety of styles, and analyzed them according to hundreds of production features and characteristics. Then we closely studied the results of the mastering jobs done by human engineers on those same tracks.” – LANDR CEO Pascal Pilon

By tracking the decisions made and processes applied by human engineers, LANDR established processing systems that vary according to the music it receives. “We apply a custom chain of treatments and tools, exactly like what human sound engineers would do,” Pilon said. “Now that we’ve mastered close to 6 million songs, the process as a whole keeps self-improving. The more it masters, the better it gets.”

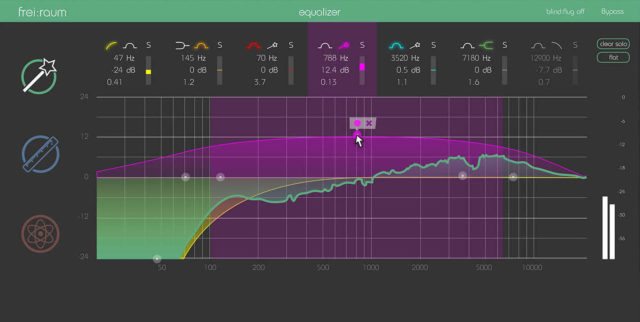

Sonible

While more quality data helps to improve machine learning’s results, it runs up against limitations in the music space because there is not always a “correct outcome” for an artistic creation. For example, Sonible uses machine learning “smart filters” on its smart:EQ+ and frei:raum plug-ins to automatically set EQ levels based on probabilities for the best frequency levels. However, as Sonible’s CEO notes, its smart filters are based on typical levels, but typical is not always best.

“Machine learning can only be as good as the database it is built upon, and this database has to be correct. For certain mixing tasks, there is no 100% ground truth, so we always have to work with subjective trade-offs in the training of our algorithms.” – Alexander Wankhammer, Sonible CEO

What’s Holding Machine Learning Back?

The Accusonus Regroover plug-in takes full drum beat loops and “unmixes” them into their separate parts using machine-learning algorithms. You can then remix, rearrange, and effect those parts, or chop parts into one-shot sounds on a pad matrix. Results vary depending on the length of the loop and how repetitive the individual drum parts are. Sometimes you’ll get completely clean kick, snare, and other tracks, while other times you’ll get partially separated layers that open up other creative possibilities.

Accusonus co-founder and CEO Alex Tsilfidis said that, beyond CPU and programming challenges, more could be done for music production if DAW software got rid of the “single-track paradigm,” where changes to one track of a song don’t affect the other tracks:

“We all know that the settings and effects in your bass track directly relate to how you’ll handle your kick track and vice versa,” Tsilfidis said. “But a plug-in on your kick track cannot access the data in your bass track. Intelligent new algorithms need access to all tracks simultaneously, and today’s DAWs and plug-in formats don’t offer that by default.”

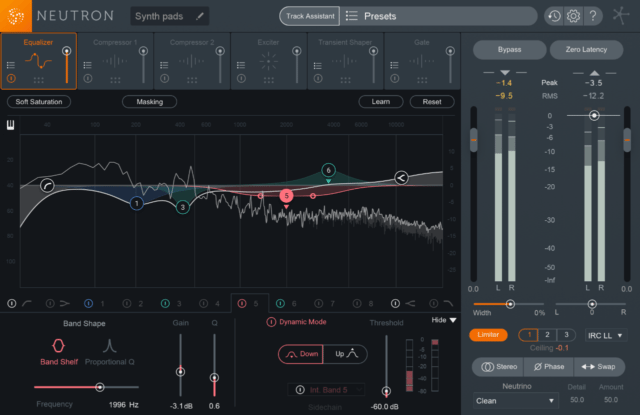

One of the leaders in machine learning for music production, iZotope, has begun to address that problem with recently released updates to its flagship mixing and mastering plug-ins, Neutron 2 and Ozone 8. They use inter-plug-in communication to access all the instances of those plug-ins in a session. Neutron 2’s Track Assistant also uses machine learning to automatically identify the type of instrument on a track and to set an “adaptive preset specific to your audio” based on the kind of sound you’re after.

The iZotope RX6 Advanced audio repair software uses processing based on machine learning to analyze the spectrogram of audio recordings. This allows its tools to isolate dialog from outside noise, remove microphone handling noise and other “mic rustle” sounds, and more. The tools help save recordings that otherwise would need to be scrapped, but they are very CPU intensive. iZotope Senior DSP Research Engineer Gordon Wichern thinks the CPU load has made the pro audio industry slower to adopt machine learning. “It’s a very latency- and CPU-sensitive industry,” he said.

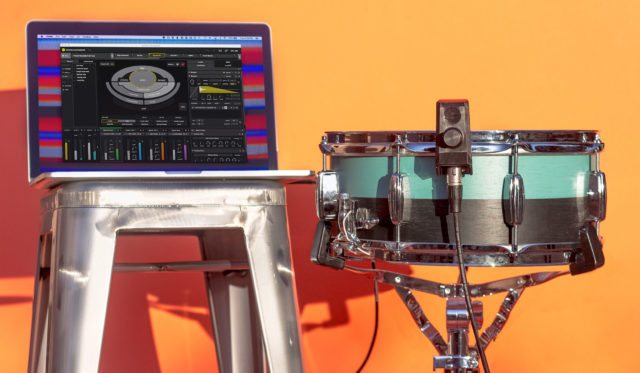

Most of the professionals I interviewed for this article agreed that the most intense machine-learning processes would tax the best CPUs currently available. Audio applications often need to run in real time, and the majority of machine learning algorithms in the world are not intended for real-time use, according to Tlacael Esparza, co-founder of Sunhouse.

His company’s Sensory Percussion combines software with drum sensors to turn an acoustic drum kit into a wildly expressive and expansive electronic instrument. The software uses machine learning and DSP to translate the sound from different parts of each drum into a spatial map. Triggering different spots on the map with a strike can launch different audio samples, blend multiple samples, or control sound parameters.

“With real-time audio, you have about a millisecond to do a calculation, so it takes a lot of creative engineering,” Esparza said. “The faster the computer, the more you can do. The more channels you can run and effects you can have.”
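To put that deadline in perspective, the time a program has to process one block of audio is simply the block size divided by the sample rate. The short Python sketch below works this out for a few buffer sizes. The sizes and the function name `buffer_budget_ms` are illustrative assumptions, not Sunhouse’s actual settings.

```python
def buffer_budget_ms(buffer_size, sample_rate):
    """Milliseconds available before the next audio buffer is due."""
    return 1000.0 * buffer_size / sample_rate


# Smaller buffers mean lower latency but a tighter processing deadline.
for size in (32, 64, 128, 512):
    budget = buffer_budget_ms(size, 44_100)
    print(f"{size:>4} samples at 44.1 kHz -> {budget:.2f} ms")
```

At a 64-sample buffer, everything (analysis, machine learning, and effects) has to finish in roughly a millisecond and a half, every single time, or the audio glitches.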

Where Is Machine Learning Headed?

Leaps in CPU power, perhaps from the fabled quantum computing technology that always seems to be on the horizon, could rocket machine learning for audio to new heights.

iZotope’s Wichern noted that unlimited computation could allow machine learning algorithms to learn from audio waveforms at typical sampling rates of 44.1kHz and 48kHz. Current audio machine learning techniques use low sampling rates like 8-16kHz, or convert the audio to a graphic such as a spectrogram. “This could allow many exciting new interactions with audio machine learning systems that no one has thought of yet,” he said.
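Part of the gap Wichern describes comes down to sheer data volume. As a back-of-the-envelope Python sketch, assume a three-minute mono track and, for the spectrogram, a hop of 512 samples between frames (a common but arbitrary choice). The helper names are hypothetical.

```python
def num_samples(duration_s, sample_rate):
    """Number of audio samples in a mono recording of the given length."""
    return duration_s * sample_rate


def num_frames(duration_s, sample_rate, hop):
    """Number of whole spectrogram hops that fit in the recording."""
    return num_samples(duration_s, sample_rate) // hop


duration = 180  # seconds: a three-minute track
for rate in (8_000, 16_000, 44_100, 48_000):
    print(f"{rate:>6} Hz: {num_samples(duration, rate):>9,} samples")

frames = num_frames(duration, 44_100, 512)
print(f"Spectrogram at 44.1 kHz, hop 512: {frames:,} time steps")
```

Learning directly from the 44.1kHz waveform means stepping through nearly 8 million values for one track, more than five times as many as at 8kHz, while a spectrogram condenses the same audio into roughly 15,500 time steps.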

Perhaps it could help with “singing voice separation,” the industry term for separating vocals from a fully mixed song. This process is underway, but the results are not quite there yet. You can hear the state of the art for signal separation, which includes breaking down a fully mixed song into vocals, drums, bass, accompaniment, and “other” tracks, at the Signal Separation Evaluation Campaign site, and speculate how far we are from fully demixed music.

“Machine learning is advancing so rapidly, we can expect music separation to continuously improve,” Wichern said.

Since iZotope already uses machine learning to save audio recordings that otherwise would have to be re-recorded, Wichern could see the underlying algorithms being configured to repair mistakes in recorded DJ sets. “It’s just a matter of collecting the training data, both pristine and botched sets,” he said.

[Before adoption] there needs to be solid user-facing benefits that make life easier for DJs

Yet how likely is all of that legwork to happen? Serato’s Longerstaey said, “there needs to be solid user-facing benefits that make life easier for DJs before significant investment is undertaken in this space.” He also mentioned that machine learning could be used to make song recommendations for DJs based on the characteristics of their track selection history.

While the Automix functions on DJ software today are ripe for ridicule, it’s easy to imagine machine learning making automixing much more natural and tailored to each particular mix, as well as making the track selection order tasteful, smooth, and informed by current trends.

Would [getting a DJ gig] get even worse when a robot could take your job?

We all know how competitive it can be to score DJ gigs now. Would it get even worse when a robot could take your job? Well, besides software companies not wanting to innovate their customers out of work, most of my interviewees picture a future where better machine learning functions help their human counterparts by saving them time and helping them be more creative, rather than replacing them.

For example, iZotope is not interested in fully automating the mixing process, because that would be uninspiring and dismissive of human ingenuity.

Pilon of LANDR said there’s no limit to how sophisticated and configurable its mastering processes can get over time, but that it will always rely on the tracks it receives being mixed well in the first place. “When mastering engineers receive a poorly mixed version of a song,” he said, “they often act as quality assurance. They can either give feedback or try to fix some of the poor sections of that mix. It’s really the artistic element of a sound engineer’s job. Those engineers will always have that edge of taking liberties with the content that LANDR wouldn’t.”

In one of his articles on machine learning, Mike Haley, the Machine Intelligence leader at Autodesk software, said we’re in the “low-hanging fruit” era, where machine-learning tools handle basic optimization and error-correction. In the next phase, “intelligent assistants” will learn how you work and handle repetitive tasks to save you time and free you up for creative aspects.

Wankhammer of Sonible explained that when that next phase comes, the smart filters on their EQ plug-ins will become “personalized” and predict each user’s preferences based on the specific audio signal. “This means that algorithms will learn from their users,” he said. “The better the audio engineers are at their job, the better trained the plug-ins will be. It sounds funny, but it’s a potential scenario.”

Esparza went even further with his vision for machine-learning-enhanced computer music. Sensory Percussion’s long-term goal is:

“for a computer to listen to a musician, and understand what the musician is doing as another musician would. So it knows where the downbeat is, the tempo, the identifiable repeating rhythms, and it can anticipate structural changes. It knows when the chorus is coming because it can parse music in real time as another musician can. That will allow a lot more flexibility and interesting things to happen between a computer and a musician, where we can get away from restrictive click tracks and grids, and musicians can just express themselves with a computer supporting them in a way that doesn’t restrict them. That’s possible for sure. Not today, but we’re working towards it.”

[Note: Pioneer DJ and Ableton declined to comment for this article, and Native Instruments did not respond to our inquiries in time for publication.]