I’ve been telling you this for months – AI-generated music is going to come very fast and be really good. We have just seen a giant leap forward that brings us much closer to completely changing the way the music world works. Google has teased a new generation of AI music tools that appear to be really exceptional – with one massive potential catch.

First, let me save you some time: we (the everyday DJ and music producer) can’t use these tools – yet.

Google’s DeepMind AI team has released a series of teaser examples that demonstrate their new music AI model “Lyria” in two interesting use cases:

1: Generate mini songs for YouTube Shorts

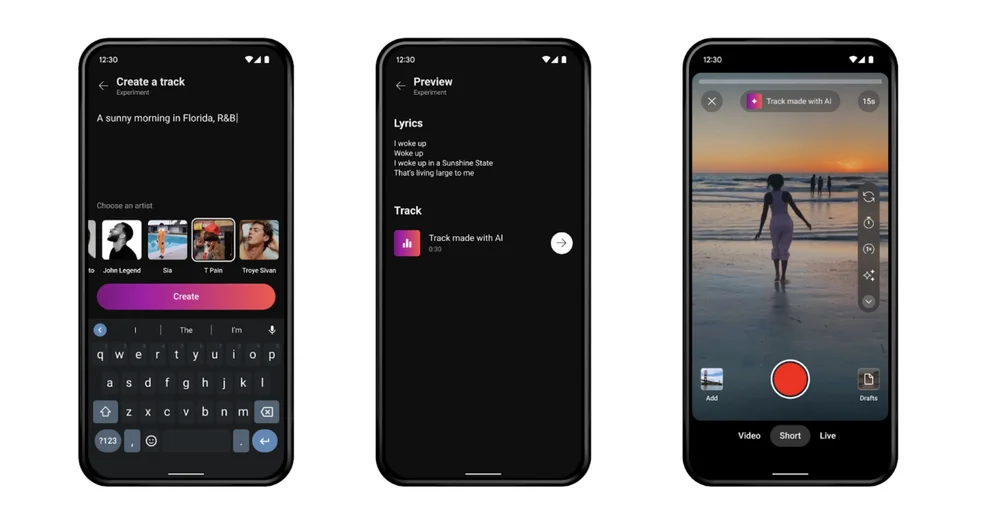

In their first example, Google’s AI team will apparently offer a tool called Dream Track. In their words:

“A limited set of creators will be able to use Dream Track for producing a unique soundtrack with the AI-generated voice and musical style of artists including Alec Benjamin, Charlie Puth, Charli XCX, Demi Lovato, John Legend, Sia, T-Pain, Troye Sivan, and Papoose.”

The examples they provide are pretty compelling. I instantly wanted to create a custom video about how my ex broke my heart, set to a track in the style of T-Pain. lol – guess I have to wait a minute on that one…

The examples they provided are limited, but they are some of the best demonstrations of AI-generated music yet:

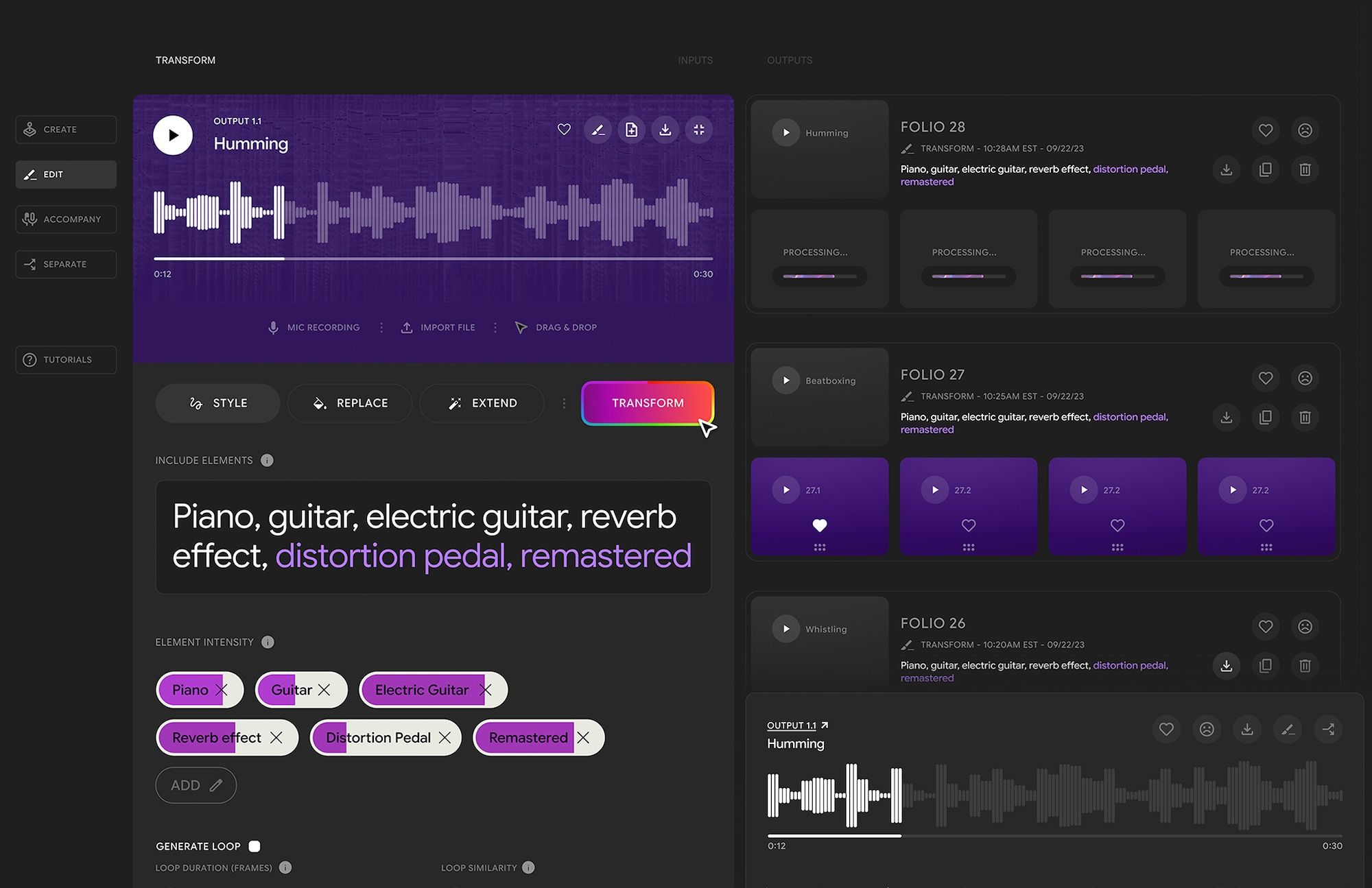

2: Create, edit, and re-arrange songs with AI music-generation tools.

Google announced they will be creating a new set of tools that go beyond just music generation and into AI music production and editing.

“With our music AI tools, users can create new music or instrumental sections from scratch, transform audio from one music style or instrument to another, and create instrumental and vocal accompaniments.”

This technology may put current DAWs like Ableton and GarageBand out of business unless they develop their own AI music generation tools.

Why? They simply will not have access to the most important layer of the music: its original generation context. Let me explain:

When Google generates a song, they should be able to generate layers, which you would then want to separate and manipulate. If you take a fully generated audio file into GarageBand, then separating out the parts is possible – but very, very crude. People will want to edit these things at the point of creation.

Simply put – Own the model and you own the process.

What’s the “MASSIVE” catch? It has to do with copyright.

Here is the big one. Google reports they have developed a digital watermarking technique called SynthID that stays with an audio file no matter what format it is converted into. It seems plausible this watermark not only demonstrates the music was generated by Google AI but also “what prompts were used in its creation”.

Two possible outcomes from this technology include:

- Artists actually get paid and will likely have rights to your material.

If you generate a song that is in the “style of John Mayer” and it ends up being a hit, this could be a mechanism for triggering a lawsuit from Mr. Mayer’s attorneys.

- Google would likely claim some intellectual rights.

It’s very hard for me to imagine that Google would not claim rights to anything you generate using their tools. Many social media companies effectively own the distribution and monetization rights to content you publish on their platforms, so I doubt this would be any different.

A very likely outcome is that most, if not all, of the revenue collected on the billions of songs that will soon circulate the internet will be retained by the AI companies and the artists involved in generating those songs.

Practically speaking – I don’t care. I just really want to make a thousand weird little micro-genres and play them in my sets. No one makes any money on music anyway – except for the foundations of dead pop stars, of course. Most of us just want to cut through the noise, create something interesting, and go on tour to play our music.

Give me amazing free tools, the power to create, and a spotlight – Google can keep the rest.